TSPSolver.jl: Using Bonobo.jl to solve our first instance

Creation date: 2022-05-06

Haven't been active on this blog for a while but now I'm finally back. About 6 months ago, before I started my job, I read a bit about one of my favorite problems, the Travelling salesman problem (TSP). In my first post I played a bit with a greedy strategy of finding a tour which isn't the best but gives a fast and hopefully not too bad upper bound. Though I focused more on an algorithm to find a good lower bound. You can read my findings about the 1-tree lower bound and the greedy upper bound before checking out this one.

Though I'll also mention some of the stuff here again as the greedy implementation needed some update. My second post of this series was about branch and bound. That one had basically nothing to do with the TSP solver itself but with a very general framework of how to solve a variety of optimization problems.

In this post I want to combine the two posts and finally have a version of a TSP solver that can actually solve simple TSPs without taking years of compute time.

The general idea

Let me explain the general idea again of how we want to find a tour which starts at one point, visits all the other points and goes back to the starting point and minimizes the tour cost. My solver will only work on symmetric problems for now. That means when we have two points, A and B, the distance from A to B is the same as from B to A. This makes some parts a lot easier.

I discussed in the first post on how to find a tree or more precisely a 1-tree which is a good lower bound of the tour cost. That means we can be sure that the tour cost of the shortest tour can't be shorter than that lower bound. Additionally we found a tour which tries to minimize the cost in each step we take. This is called a greedy tour. We now most likely have a gap between the lower and upper bound. Something like it can't be shorter than 100km and we found one which is 150km long. The optimal tour has a length of something in between.

The idea now is to force the lower and upper bound to use a specific edge or disallow that they use this edge. This way we get a new lower and upper bound and have two problems instead of one. These two problems are a bit easier to solve than the original one but now we have two of them.

That idea of branch and bound is the topic of my latest post in this series. Let's figure out how we can force a specific edge to be used or not get used in the 1-tree and the greedy search.

Fixing or disallowing an edge

In the 1-tree it's relatively simple. We can set the cost of an edge to 0 to make it as cheap as possible to the minimum spanning tree algorithm to use that edge. Similarly we can disallow an edge by removing the edge from the list of possible edges. Removing an edge in my case sets the cost of an edge to Inf.

The greedy approach is a bit more complex as we sometimes need to backtrack. We start with an edge that we want to include in our tour and at a later stage we might figure out that we don't have points left that we can visit from a point for which we have a lot of disallowed edges. You can read more about finding an actual tour that fulfills our constraints by searching for Hamiltonian cycle/path. That problem of finding a tour is already NP-complete so it doesn't really make sense for us to solve it till the end just to find an upper bound.

Additionally we still want to make sure that we find a somehow greedy tour and not just any tour. In my current approach I simply give up searching for a tour after 1,000,000 backtracks. It's also important to figure out when no such tour can exist or more precisely no such tour that actually uses all the edges we fixed.

The greedy function now has three different types of result.

We found a greedy tour

We know that there is no tour which uses all the fixed edges

We just gave up our search but there might exist a tour

With this information we can actually use Bonobo to solve a TSP problem.

Bonobo.jl

Bonobo is aimed as a general branch and bound framework so we need to specify how we evaluate each branching node as well as which branching variable we are choosing and which new branching nodes we create.

Bonobo itself is also my project and quite new so let this post be some kind of tutorial on how to use it for your own project.

First of all we need to specify some kind of root object which holds the general information about our problem. In our case it's a weighted undirected graph and I use the MetaGraphs for the creation.

I've also changed my reading .tsp parser a bit to return a MetaGraph but feel free to check that code in my TSPSolver repository.

Internally however I work with a cost matrix for the 1-tree lower bound which is the reason why my Root structure stores both.

struct Root

g::MetaGraph

cost::Matrix{Float64}

function Root(g::MetaGraph)

cost = zeros((nv(g), nv(g)))

for i in 1:nv(g)

for j in i+1:nv(g)

weight = get_prop(g, i, j, :weight)

cost[i,j] = weight

cost[j,i] = weight

end

end

new(g,cost)

end

endNext we need to figure out what kind of information we want to store in each branching node. We somehow need to store which edges we want to fix and which we want to disallow. This is important to evaluate the lower and upper bound. After the evaluation we need to store the tour and the 1-tree.

mutable struct Node <: AbstractNode

std :: BnBNodeInfo

tour :: Union{Nothing, Vector{Int}}

mst :: Union{Nothing, Vector{Edge{Int}}}

fixed_edges :: Vector{Tuple{Int,Int}}

disallowed_edges :: Vector{Tuple{Int,Int}}

endIt is also important for Bonobo that we have the std field with type BnBNodeInfo and that our struct is of type AbstractNode.

For this to work you have to have Bonobo installed and have it imported with using Bonobo.

Then we need to define our ids of branching variables such that Bonobo can decide which variable to branch on. Though in our case we don't really work with variables to branch on. Well we kinda branch on an edge but we can define our branching variable ourselves to the value we return isn't important it just needs to exist for now. 😄

function Bonobo.get_branching_indices(root::Root)

return 1:1

endNow let's start with the optimize call of the TSPSolver.

function optimize(input_path)

g = simple_parse_tsp(input_path)

bnb_model = Bonobo.initialize(

traverse_strategy = Bonobo.BFS,

branch_strategy = LONGEST_EDGE,

Node = Node,

root = Root(g),

sense = :Min,

Value = Vector{Int}

)

# more to come in here...

endWe read our graph from a .tsp file and initialize the branch and bound data structure. We specify that we want the BFS traverse strategy and use the longest edge as our branching variable. For this we need to create a struct:

struct LONGEST_EDGE <: Bonobo.AbstractBranchStrategy endThen we define that our node type is the created Node and our root is the specific graph and its cost of the .tsp file we want to solve. As we want to minimize the tour cost we set sense = :Min and our tour is a vector of integer we also specify that type.

Next we need to specify the value of the root problem.

Bonobo.set_root!(bnb_model, (

tour = nothing,

mst = nothing,

fixed_edges = Vector{Tuple{Int,Int}}(),

disallowed_edges = Vector{Tuple{Int,Int}}()

))We tell it that we haven't found a tour or minimum spanning tree (actually a 1-tree) yet and we don't want to fix or disallow edges yet.

Before we call the Bonobo.optimize!(bnb_model) function we need to implement a couple of more methods.

evaluate_node!to evaluate the current node and return a lower and upper bound for this branchget_relaxed_valuesto return the values for branching and for a solution.We want to simply return our

tourhere

get_branching_variableWe need to implement this ourselves as we don't work with standard floating point variables where Bonobo would check if they are already discrete.

get_branching_nodes_infoto create new branching nodes

Let's start with the easy one (2.)

function Bonobo.get_relaxed_values(tree::BnBTree{Node, Root}, node::Node)

return node.tour

endThe BnBTree is parameterized by the node type the root type and some more to make it easy to dispatch on. We should specify our own types there to not do any kind of type piracy.

Now the evaluate_node! functionality. The parameters are tree::BnBTree and the current node that we want to evaluate.

function Bonobo.evaluate_node!(tree::BnBTree{Node, Root}, node::Node)

...

endIn that function we first want to fix and disallow our edges for this specific node without chaning up our root node.

root = deepcopy(tree.root)

extra_cost = 0.0

for fixed_edge in node.fixed_edges

extra_cost += fix_edge!(root, fixed_edge)

end

for disallowed_edge in node.disallowed_edges

disallow_edge!(root, disallowed_edge)

endThen we compute our lower and upper bound:

mst, lb = get_optimized_1tree(root.cost; runs=50)

tour, ub = greedy(root.g)The extra cost of fixing edges need to be added back to the lower and upper bound values as we set their cost to 0 in the fix_edge! call.

lb += extra_cost

ub += extra_costThen we set our node values:

node.mst = mst

node.tour = tourThen when no tour can exist the heuristic returns Inf as the upper bound. In that case both lower and upper bound shall be NaN such that Bonobo kills that branch.

# no tour can exist

if isinf(ub)

return NaN, NaN

endOtherwise we simply return our bounds:

return lb, ubNow we need to decide for a variable/edge to branch on. We do that with the get_branching variable function.

function Bonobo.get_branching_variable(tree::BnBTree{Node, Root}, ::LONGEST_EDGE, node::Node)

longest_len = 0.0

longest_edge = -1

for edge in node.mst

edge_tpl = (edge.src, edge.dst)

if !(edge_tpl in node.fixed_edges) && !(edge_tpl in node.disallowed_edges)

len = get_prop(tree.root.g, edge_tpl..., :weight)

if len > longest_len

longest_edge = edge

longest_len = len

end

end

end

return longest_edge

endHere the second argument defines the branching strategy where we defined that we want to use LONGEST_EDGE which is our own strategy.

It basically goes through all edges in the minimum spanning tree and checks whether there is an edge which is not yet fixed and not yet disallowed. From all these edges it return the longest one. Don't ask me whether that makes any actual sense but it is nevertheless a strategy.

No worries I'll come to optimizing this TSPSolver at a later stage.

The last function we need to specify is get_branching_nodes_info which returns the information about new nodes that we want to create. Til now we only evaluated the current node and decided which edge we want to branch on but we now have to tell Bonobo which new subproblems we want to create.

At this stage we already know the edge we want to branch on so we simply need to fix that edge in one branch and disallow it in the other.

function Bonobo.get_branching_nodes_info(tree::BnBTree{Node, Root}, node::Node, branching_edge::Edge)

nodes_info = NamedTuple[]

new_fixed_edges = deepcopy(node.fixed_edges)

push!(new_fixed_edges, (branching_edge.src, branching_edge.dst))

push!(nodes_info, (

tour = nothing,

mst = nothing,

fixed_edges = new_fixed_edges,

disallowed_edges = deepcopy(node.disallowed_edges),

))

new_disallowed_edges = deepcopy(node.disallowed_edges)

push!(new_disallowed_edges, (branching_edge.src, branching_edge.dst))

push!(nodes_info, (

tour = nothing,

mst = nothing,

fixed_edges = deepcopy(node.fixed_edges),

disallowed_edges = new_disallowed_edges,

))

return nodes_info

endWe can't directly return the nodes as Bonobo needs to inject some of its own magic into it like saving the node ids and lower and upper bound. Therefore we return a vector of NamedTuple which stores the information about the new nodes.

Now we can go back to our optimize method and call:

Bonobo.optimize!(bnb_model)As an example I can call:

bnb_model = TSPSolver.optimize(joinpath(module_path, "../test/data/berlin52.tsp"));where the test file is from here berlin52.tsp.

Currently it takes my solver roughly 0.4s to solve this problem.

Benchmark against TravelingSalesmanExact.jl

My first TSP experience was a while back where I solved them using a MIP approach and the TravelingSalesmanExact.jl was inspired by that blog post.

Therefore I find it interesting how this other very basic branch and bound approach compares to the MIP approach at this stage.

using TravelingSalesmanExact

using HiGHS # as I don't have access to Gurobi anymore

set_default_optimizer!(HiGHS.Optimizer)

cities = simple_parse_tsp("berlin52.tsp");

tour, cost = get_optimal_tour(cities)And the result actually brought me quite some joy 😂 The MIP approach is currently about 10x faster than my new TSPSolver .

Though this is of course just more motivation to improve it.

julia> @benchmark bnb_model = TSPSolver.optimize(joinpath(module_path, "../test/data/berlin52.tsp"))

BenchmarkTools.Trial: 13 samples with 1 evaluation.

Range (min … max): 394.385 ms … 421.570 ms ┊ GC (min … max): 7.74% … 10.08%

Time (median): 406.340 ms ┊ GC (median): 8.62%

Time (mean ± σ): 406.939 ms ± 7.114 ms ┊ GC (mean ± σ): 8.56% ± 0.78%

▁ ▁ ▁ ▁ ▁ █ █▁ ▁ ▁ ▁

█▁▁▁▁▁▁▁▁▁▁▁█▁▁█▁▁█▁▁▁▁█▁▁█▁▁██▁▁▁▁▁▁▁▁▁▁▁▁█▁▁▁▁█▁▁▁▁▁▁▁▁▁▁▁█ ▁

394 ms Histogram: frequency by time 422 ms <

Memory estimate: 586.61 MiB, allocs estimate: 1734393.julia> @benchmark tour, cost = get_optimal_tour(cities)

BenchmarkTools.Trial: 82 samples with 1 evaluation.

Range (min … max): 52.062 ms … 77.058 ms ┊ GC (min … max): 0.00% … 9.20%

Time (median): 58.953 ms ┊ GC (median): 0.00%

Time (mean ± σ): 61.294 ms ± 5.591 ms ┊ GC (mean ± σ): 1.58% ± 3.50%

▂ ▂█ ▂

▅▄▁▁▁▁▄▄▅█▄▇▅▄████▁▅▄▁▁▄▄▁▇▄▅▁▁█▅▄█▄▅▄▄▁▄▄▄▅▁▁▄▁▁▁▁▄▁▄▄▅▁▁▄ ▁

52.1 ms Histogram: frequency by time 74.2 ms <

Memory estimate: 10.19 MiB, allocs estimate: 130481.Future ideas

I hope you enjoyed this post and learned something about solving travelling salesman problems and using Bonobo for your own branch and bound problems. The memory consumption is quite a bit higher for the TSPSolver which I think is a good place to start investigating how to make it faster.

Additionally I saw that the lower bound at the beginning is quite close to the actual solution. It starts with a lower bound of ~7530 and an upper bound of ~8981. The actual solution is ~7544.4 which you can get with:

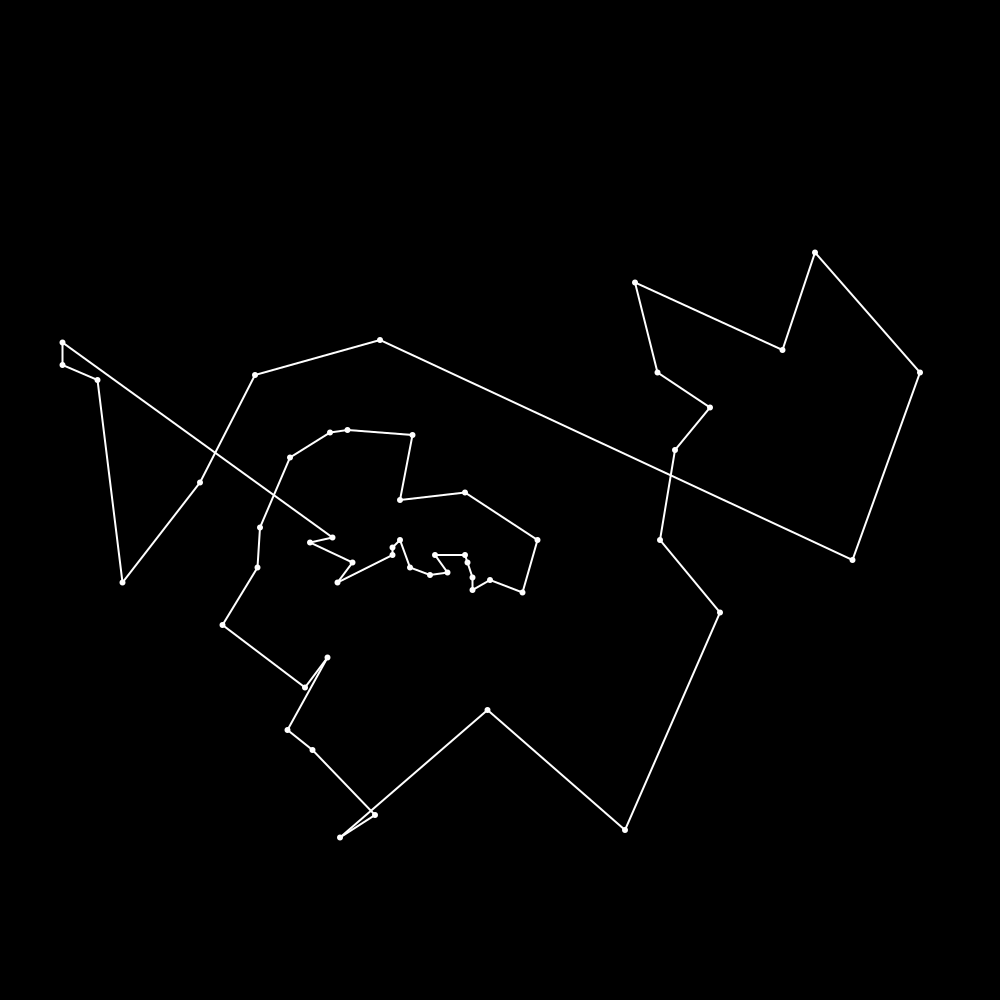

Bonobo.get_objective_value(bnb_model)Therefore I think we can focus next time a bit more on finding a better upper bound. I think you would agree that this doesn't look close to optimal. It's not the worst but it has definitely room for improvement.

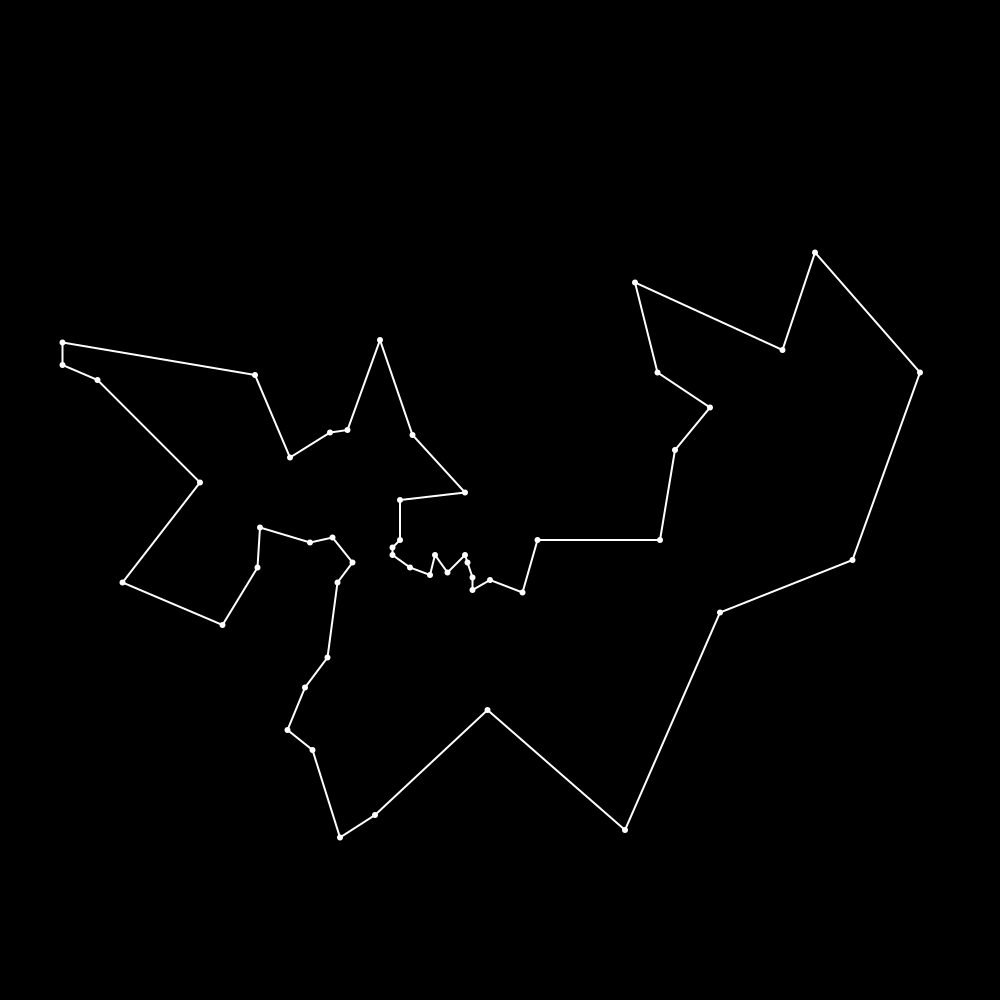

This is the optimal tour:

Therefore I've added 2-opt in my next post as well as some other things to improve the performance.

If you want to be informed when I release the next post please consider subscribing to my email newsletter.

Additionally you might want to consider joining my most supportive fan group on Patreon. Then you'll be the first to be able to read this post!

Special thanks to my 10 patrons!

Special special thanks to my >4$ patrons. The ones I thought couldn't be found 😄

Anonymous

Gurvesh Sanghera

Szymon Bęczkowski

Colin Phillips

For a donation of a single dollar per month you get early access to these posts. Your support will increase the time I can spend on working on this blog.

There is also a special tier if you want to get some help for your own project. You can checkout my mentoring post if you're interested in that and feel free to write me an E-mail if you have questions: o.kroeger <at> opensourc.es

I'll keep you updated on Twitter opensourcesblog.

Want to be updated? Consider subscribing on Patreon for free