Neuromorphic computing: Hardware

Creation date: 2019-08-05

About two months ago I wrote a blog post about neuromorphic computing, which you probably want to read first if you haven't already. Two weeks ago I gave a talk about it in a university seminar, and I mentioned that I want to dive a little deeper into the hardware used for neuromorphic computing and implement a spiking neural network myself in Julia.

Unfortunately this isn't the post about the spiking neural network yet (just some small comments on what I've tried so far).

Nevertheless, let's first start with the hardware: The goal is to build a computer system which functions more like the brain than artificial neural networks do. One key component is that it uses spikes for communication, and timing is critical. In this post I'll mostly talk about one simulation approach, SpiNNaker, which is developed at the University of Manchester, and one emulation approach, HiCANN. I'll briefly mention TrueNorth and Loihi for comparison but will not dive deeper into their implementation here.

First of all, what do I mean by simulation vs. emulation? Simulation uses more conventional hardware, which, as mentioned in the previous post, has the disadvantage of using more power and being slower, in the sense that it isn't built for the task, but it works if we just use more hardware. Emulation means actually implementing the neuron model in hardware. This can be done in an analog or a digital fashion, but compared to simulation the hardware itself is specialized for the task instead of mainly the software.

I think starting with SpiNNaker makes quite some sense as it's easier to understand.

SpiNNaker

Since 2018 SpiNNaker has been able to simulate, theoretically, 1% of the human brain. By theoretically I mean: we have pretty much no clue how to actually use it, for example how the system should learn and do something really useful. I wasn't able to find any publication about neuroscience advancements made with any of the systems I mention here, which is quite disappointing :/

So 1% of the human brain is about 1 billion neurons. Now how do they do it? First of all they use SpiNNaker boards with 48 chips, each of which has 18 ARM cores, and each core simulates 1,000 neurons in biological real time. The chips have a fairly low clock speed of 200 MHz. The communication is globally asynchronous and locally synchronous, which means that there is no synchronization between the chips. If a neuron, which is handled by one of the cores, spikes, it has to communicate this to the connected neurons. There are different possibilities here:
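To get a feeling for the scale, here is a small back-of-the-envelope calculation using only the numbers from the paragraph above. It is a rough sketch: I assume that every core on every chip simulates neurons, which is probably too optimistic for a real machine, and the function name `hardware_needed` is just made up for this example.

```python
NEURONS_PER_CORE = 1_000   # from the text above
CORES_PER_CHIP = 18        # from the text above
CHIPS_PER_BOARD = 48       # from the text above


def ceil_div(a, b):
    """Integer division that rounds up."""
    return -(-a // b)


def hardware_needed(neurons):
    """Return (cores, chips, boards) needed, assuming all cores simulate neurons."""
    cores = ceil_div(neurons, NEURONS_PER_CORE)
    chips = ceil_div(cores, CORES_PER_CHIP)
    boards = ceil_div(chips, CHIPS_PER_BOARD)
    return cores, chips, boards


cores, chips, boards = hardware_needed(1_000_000_000)  # about 1% of the brain
print(f"{cores:,} cores on {chips:,} chips on {boards:,} boards")
```

So under these assumptions we are talking about a million cores spread over more than a thousand boards.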

Are the connected neurons represented by the same core,

on the same chip,

on the same board,

or somewhere else?

The first two are quite simple as there is no network communication needed. For the other ones a packet has to be sent over the network. The packet could contain the source and the destination address, or all connections to the other neurons, which could get quite big. What is actually done is that the packet only contains the source of the spike, and every chip has a routing table which knows how this neuron is connected to the other ones and sends the packet in the right direction. This way the spike can be copied at the last possible moment, which reduces the needed bandwidth.
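The source-only routing idea can be sketched in a few lines. This is a toy model and not the actual SpiNNaker router: the `Chip` class, its routing table layout and the little four-chip network are all assumptions I made up for illustration. The packet is just the id of the neuron that spiked, and each chip looks up what to do with it.

```python
from dataclasses import dataclass, field


@dataclass
class Chip:
    name: str
    # Hypothetical routing table: source neuron id -> (local cores, next chips)
    routes: dict = field(default_factory=dict)
    delivered: list = field(default_factory=list)  # (core, source) pairs
    forwarded: int = 0                             # packets sent over a link

    def add_route(self, source, local_cores=(), next_chips=()):
        self.routes[source] = (tuple(local_cores), tuple(next_chips))

    def receive(self, source):
        """Handle a spike packet that only carries the source neuron id."""
        local_cores, next_chips = self.routes.get(source, ((), ()))
        for core in local_cores:
            self.delivered.append((core, source))
        for chip in next_chips:
            # The packet is only copied here, where the paths diverge.
            self.forwarded += 1
            chip.receive(source)


# Neuron 7 lives on chip A and connects to neurons on chips C and D via B.
a, b, c, d = Chip("A"), Chip("B"), Chip("C"), Chip("D")
a.add_route(7, next_chips=[b])
b.add_route(7, next_chips=[c, d])
c.add_route(7, local_cores=[0])
d.add_route(7, local_cores=[2])

a.receive(7)  # neuron 7 spikes
total_link_hops = a.forwarded + b.forwarded
print(c.delivered, d.delivered, total_link_hops)
```

In this example the spike travels over three links in total. Sending two separate packets with explicit destinations (A to B to C and A to B to D) would need four, so copying as late as possible saves bandwidth on the shared part of the path.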

A quite interesting thing is that packets simply get dropped if they are too old. So if a spike keeps moving through the network and never reaches its destination (livelock), it just gets discarded, and there is no resend mechanism. That somehow makes sense I suppose: everything is running in real time, so a resent packet would have the wrong time anyway and nobody can wait for it.
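Dropping by age can be illustrated with a tiny sketch. The names `Packet`, `forward`, `circulate` and the threshold `MAX_AGE` are hypothetical, and I measure age in simple integer time steps, which is surely not how the hardware encodes it. The point is only that an old packet disappears without anyone being notified.

```python
from dataclasses import dataclass

MAX_AGE = 2  # hypothetical threshold, in time steps


@dataclass
class Packet:
    source: int   # id of the neuron that spiked
    sent_at: int  # time step at which the spike was emitted


def forward(packet, now, max_age=MAX_AGE):
    """Return the packet if it is still fresh, otherwise None (dropped, no resend)."""
    if now - packet.sent_at > max_age:
        return None
    return packet


def circulate(packet, start, max_steps=100):
    """Simulate a packet stuck in a routing loop and return the step at which it is dropped."""
    for now in range(start, start + max_steps):
        if forward(packet, now) is None:
            return now
    return None


dropped_at = circulate(Packet(source=7, sent_at=10), start=10)
print(dropped_at)
```

Without the age check the packet in `circulate` would go around forever, which is exactly the livelock case.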

You might see some downsides and upsides in this approach:

It is highly customizable as everything is done in software: if a new neuron model seems to be much better, then only the software needs to be changed, while the communication probably stays the same.

The communication to other chips takes time, much like the connections in the brain have different lengths, so it also takes a different amount of time there to send a message from one neuron to another.

How about dropping a spike? Does this ruin the network? I'm not sure how often this happens in SpiNNaker, so it's hard to tell.

It uses conventional hardware with the von Neumann approach, so it is not the most power efficient. But maybe we should care about other things first, like making it actually useful for understanding processes in the brain and rebuilding them to get a learnable system.

HiCANN

The HiCANN chip is developed at the University of Heidelberg, where I currently study Computer Science. I mentioned some of the hardware in the previous post and just want to add some things here that I found out later:

The neuron has two input connections, a spiking output to neighboring neurons and to the network, the capacitor we mentioned before to integrate, a leaky unit and some special parts for their more complicated model.

Two neurons are connected via synapses, but they aren't physically connected in a predefined way, which wouldn't make much sense as we want to program the chip. Instead each synapse has a bit code (here 4 bits) which is used to activate the synapse for communication. If this bit code is transmitted, the synapse knows that it has to do some work. Work means it takes a second short bit code (also 4 bits here), which defines its weight, and converts it into a current which is the input for the postsynaptic neuron.

As mentioned in the brain section, we have excitatory and inhibitory inputs, which increase and decrease the potential of a neuron respectively. That is the reason why the neuron here has two input connections. A synapse can be deactivated by setting its weight to 0.
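Here is how I picture the synapse logic described above, written as a small software model. Keep in mind that the real thing is an analog circuit: the `Synapse` class, the per-synapse `excitatory` flag and the linear conversion from weight to current via `unit_current` are my own simplifying assumptions.

```python
from dataclasses import dataclass


@dataclass
class Synapse:
    address: int             # 4-bit code this synapse listens for (0..15)
    weight: int              # 4-bit weight (0..15), 0 deactivates the synapse
    excitatory: bool = True  # assumption: which of the two inputs it feeds

    def __post_init__(self):
        if not 0 <= self.address <= 15 or not 0 <= self.weight <= 15:
            raise ValueError("address and weight must fit into 4 bits")

    def current(self, transmitted_address, unit_current=1.0):
        """Current produced when `transmitted_address` is sent past this synapse."""
        if transmitted_address != self.address:
            return 0.0  # the code is not for us, nothing to do
        return self.weight * unit_current


def input_currents(synapses, transmitted_address):
    """Sum the currents for the excitatory and the inhibitory neuron input."""
    exc = sum(s.current(transmitted_address) for s in synapses if s.excitatory)
    inh = sum(s.current(transmitted_address) for s in synapses if not s.excitatory)
    return exc, inh


synapses = [
    Synapse(address=3, weight=8),
    Synapse(address=3, weight=4, excitatory=False),
    Synapse(address=5, weight=15),  # listens for a different code
    Synapse(address=3, weight=0),   # deactivated
]
print(input_currents(synapses, transmitted_address=3))
```

Only the synapses whose code matches do any work, and the one with weight 0 contributes nothing even though its code matches.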

They mention that they connected many of these so-called analog network cores together and managed to get 40,000,000 synapses and 180,000 neurons on one wafer, and wafers can be connected to form bigger units. Unfortunately the paper doesn't really mention what they have done with it.

For comparison, the human brain has about 100,000,000,000 neurons, which is more than 500,000 times as many, and each neuron can have up to 15,000 connections. In this hardware model one neuron can have up to 14,000 connections via synapses, which is close to our brain.

These 14,000 connections are achieved by combining several neurons into one. Normally each neuron has 224 input connections, but by combining them this can be increased to about 14,000. This is a functionality I wasn't able to find in TrueNorth and Loihi, for example.
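The numbers from the last few paragraphs can be checked with a bit of arithmetic. The only thing that is not from the text is the guess in the last lines: I assume that neurons get combined in groups of 64, which would explain why the figure is "about" 14,000.

```python
BRAIN_NEURONS = 100_000_000_000  # from the text above
WAFER_NEURONS = 180_000          # from the text above
INPUTS_PER_NEURON = 224          # from the text above
TARGET_INPUTS = 14_000           # "about 14,000" from the text above

# How many wafers' worth of neurons is one human brain?
brain_to_wafer_ratio = BRAIN_NEURONS / WAFER_NEURONS

# How many neurons have to be combined to reach about 14,000 inputs?
neurons_to_combine = TARGET_INPUTS / INPUTS_PER_NEURON

# Assumption: groups of 64 combined neurons
inputs_with_64 = 64 * INPUTS_PER_NEURON

print(round(brain_to_wafer_ratio), neurons_to_combine, inputs_with_64)
```

So the brain has roughly 555,556 times as many neurons as one wafer, and combining 64 neurons would give 14,336 inputs.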

Unfortunately I wasn't able to find out how much power this chip consumes, but I'll add it here once I find out. They mention that it reduces the power consumption by several orders of magnitude, which isn't a very precise statement. Their speed-up factor is about 1,000, which means that a year can be simulated in about 9 hours.

I will show the power consumption in a second in a comparison table. Unfortunately I also found out that the 1,000 times faster than biological real time only holds for inference and not for updating the weights to make the system learnable. That can be done but takes some more time.
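The "a year in about 9 hours" figure follows directly from the speed-up factor. A quick check, where the helper name `wall_clock_hours` is just made up for this example:

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def wall_clock_hours(biological_hours, speedup):
    """Wall-clock time needed to run `biological_hours` of model time."""
    return biological_hours / speedup


hicann_hours = wall_clock_hours(HOURS_PER_YEAR, speedup=1_000)  # inference only
realtime_hours = wall_clock_hours(HOURS_PER_YEAR, speedup=1)    # biological real time
print(hicann_hours, realtime_hours)
```

That gives 8.76 hours for a simulated year, compared to the full 8,760 hours a system running in biological real time would need.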

TrueNorth and Loihi

TrueNorth from IBM and Loihi from Intel are the two biggest commercial chips. They use a digital system instead of the analog system HiCANN uses, but they emulate the brain as well. Their main goal is low power consumption. For my 30 min talk I focused mainly on SpiNNaker and HiCANN, and I do the same here: SpiNNaker is special in my opinion as it really has a huge number of artificial neurons and is flexible, and HiCANN of course because it is developed in Heidelberg.

For TrueNorth and Loihi I wanted to point out that they both have the possibility of delaying spikes, which means that they can emulate the different lengths of axons between neurons. I'm not sure whether HiCANN has this functionality, as I wasn't able to find anything about it. Now why is this useful? It makes something like coincidence detection in the hearing system possible. For humans and other animals this means we are able to hear from which direction a sound is coming, as our two ears hear the same sound at slightly different times. (It's quite useful that sound travels much slower than light, right? ;) ) This is visualized in this YouTube video:

The spike delays make it necessary that the global system is synchronous, in contrast to SpiNNaker: after each time step of sending out spikes, the system needs to synchronize itself before jumping to the next time step. They achieve this using barrier synchronization messages, as otherwise they would always have to wait the worst-case time until they can be sure that everyone has processed the last round of spikes. This way the cores can communicate that everyone is done.
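To make the coincidence detection idea a bit more concrete, here is a toy sketch with delay lines and a global time step. It is not how TrueNorth or Loihi implement delays. The function `coincidence_detectors` and its parameters are my own invention: detector `d` delays the left ear's spike by `d` steps if `d` is positive, and the right ear's spike by `-d` steps if `d` is negative. A detector fires only if both delayed spikes arrive in the same time step.

```python
def coincidence_detectors(left_spike_time, right_spike_time, max_shift=3, steps=20):
    """Return a list of (time step, detector) pairs for detectors that fired.

    Detector d fires when the right ear heard the sound d steps after the
    left ear (negative d: the right ear was first).
    """
    fired = []
    for t in range(steps):  # one global time step after the other
        for d in range(-max_shift, max_shift + 1):
            left_arrives = left_spike_time + max(d, 0)     # delayed left spike
            right_arrives = right_spike_time + max(-d, 0)  # delayed right spike
            if left_arrives == t and right_arrives == t:
                fired.append((t, d))
    return fired


print(coincidence_detectors(5, 7))  # sound reaches the left ear first
print(coincidence_detectors(5, 5))  # sound comes from straight ahead
print(coincidence_detectors(7, 5))  # sound reaches the right ear first
```

Which detector fires tells us the time difference between the ears and therefore the direction. Note that this only works because all detectors agree on what "the same time step" means, which is the reason for the global synchronization described above.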

Comparison

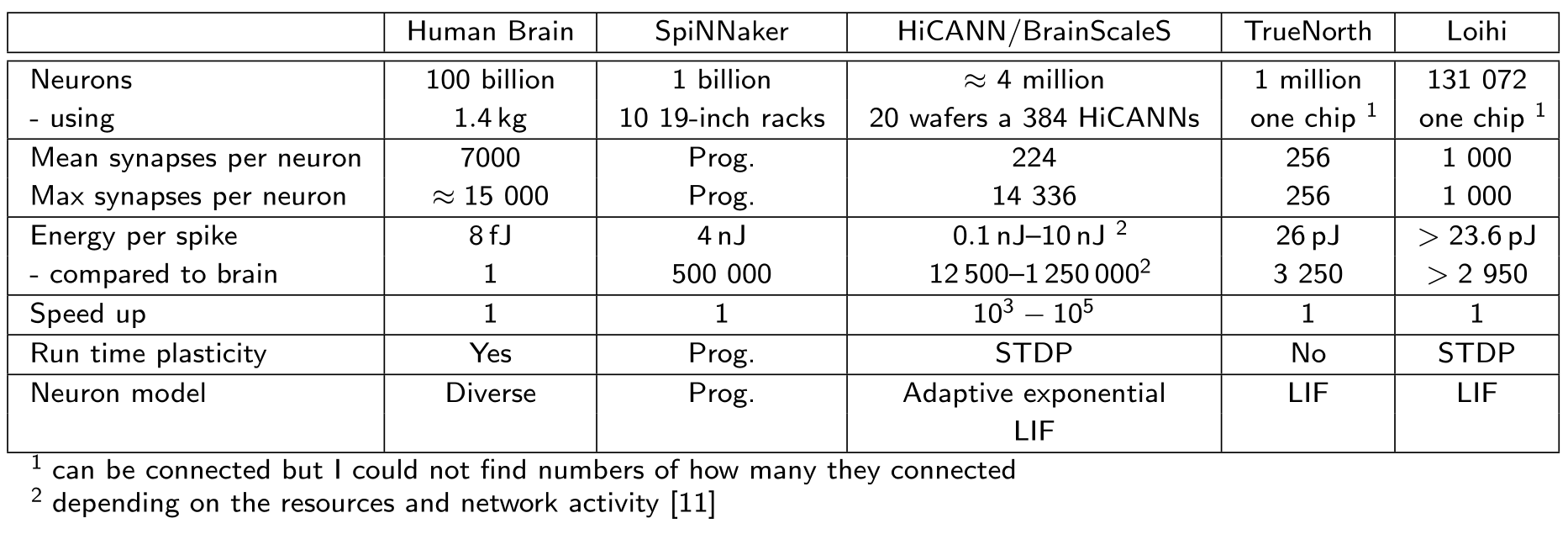

I want to compare the four different systems here.

As you can see, SpiNNaker is the most customizable approach, which can easily adapt to new advancements in neuroscience, but it also uses the most energy per spiking event. HiCANN is the only system which is faster than biological real time, but as mentioned currently only during the inference stage and not for learning. It uses a more flexible leaky integrate-and-fire neuron model which can adapt, as we know the brain does. It's hard to set the parameters right here, but at least it is possible, compared to the systems developed by IBM and Intel which still work with the most basic neuron model. Those also don't have the ability to combine several neurons to get more inputs, but they achieve their goal of being quite power efficient compared to SpiNNaker and HiCANN. They are still far away from the low power consumption observed in the biological equivalent, though.

General remarks

I'm sorry for the people who wanted more detail on the different systems, but I would suggest reading the papers in that case. These posts should just give an overview of neuromorphic computing and different hardware approaches. I hope I was able to teach you something with the last three posts about neuroscientific topics:

Introduction to Neuromorphic computing

Recap of the Live stream about Neuralink

Literature:

I am still reading the autobiography of Eric Kandel, In Search of Memory, which is an interesting read and, at least in the first half of the book, not too hard for newcomers to the field like myself. Maybe some of you are interested in How to Build a Brain. I didn't find it that easy to read, but maybe I'll give it another shot.

Outlook

I have almost finished my exams for this semester, with only one left in about a week, so I gave implementing spiking neural networks myself in Julia a shot. I gave myself the challenge to try out ideas as much as possible without reading how it should be done. This might result in a total failure, but I don't want to "restrict" my brain by first reading how other people implemented it or what the actual ideas behind it are.

My current approach is not much more specified than this: If a presynaptic neuron spikes and shortly afterwards the neuron itself spikes, then we strengthen the connection. If the neuron spikes but the presynaptic one doesn't, then the connection doesn't seem to be that useful, so we weaken it. There are some obvious questions like: How much should the connection be strengthened or weakened? Does this depend on the time difference? Is there a learning rate?
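To show what I mean, here is the rule above as a minimal sketch, written in Python for illustration rather than Julia. It is not a finished learning rule: `window`, `learning_rate` and the weight bounds are placeholder values I picked, the update does not depend on the time difference, and the function is meant to be called whenever the neuron itself spikes. These are exactly the open questions from above.

```python
def update_weight(weight, pre_spike_time, post_spike_time,
                  window=5, learning_rate=0.1, w_min=0.0, w_max=1.0):
    """Update one connection weight at the moment the neuron itself spikes.

    pre_spike_time is the last spike time of the presynaptic neuron, or None
    if it has not spiked. All parameter values are arbitrary placeholders.
    """
    pre_was_shortly_before = (
        pre_spike_time is not None
        and 0 < post_spike_time - pre_spike_time <= window
    )
    if pre_was_shortly_before:
        weight += learning_rate  # the connection seems useful: strengthen it
    else:
        weight -= learning_rate  # the neuron spiked without it: weaken it
    return min(w_max, max(w_min, weight))  # keep the weight in its bounds


w = 0.5
w = update_weight(w, pre_spike_time=8, post_spike_time=10)     # strengthened
w = update_weight(w, pre_spike_time=None, post_spike_time=20)  # weakened again
print(w)
```

The clamping at the end is one simple answer to "how much": without it the weights could grow or shrink without limit.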

I also experiment with different strategies, like learning directly in each time step or having a separate update step, which seems to be easier to implement. Something else that will be interesting later on is making it parallel, maybe running things on the GPU? But I will definitely try the new thread interface in Julia 1.3.

That's it for this post. I'll keep you updated on Twitter (OpenSourcES), or if you enjoy Reddit you can subscribe to my subreddit.

Thanks for reading!
